Artificial intelligence has moved with unusual speed from technological novelty to institutional infrastructure. What was, until recently, a tool for experimentation is now embedded in media houses, universities, corporations and public administration systems. For development organizations, the question is no longer whether AI exists, but whether it should be incorporated into their work — particularly in communication and storytelling.

The instinctive response within much of the development sector has been caution. NGOs operate on trust. They represent vulnerable communities. Their stories carry ethical weight. A misstep in communication can erode credibility built over decades. In that context, hesitation is understandable.

However, the communications environment has changed. Donors demand data-backed narratives. Policymakers expect clarity and timeliness. Social media compresses attention spans. Field teams generate volumes of programme data that often remain under-communicated due to capacity constraints. The development sector now competes in a crowded information ecosystem.

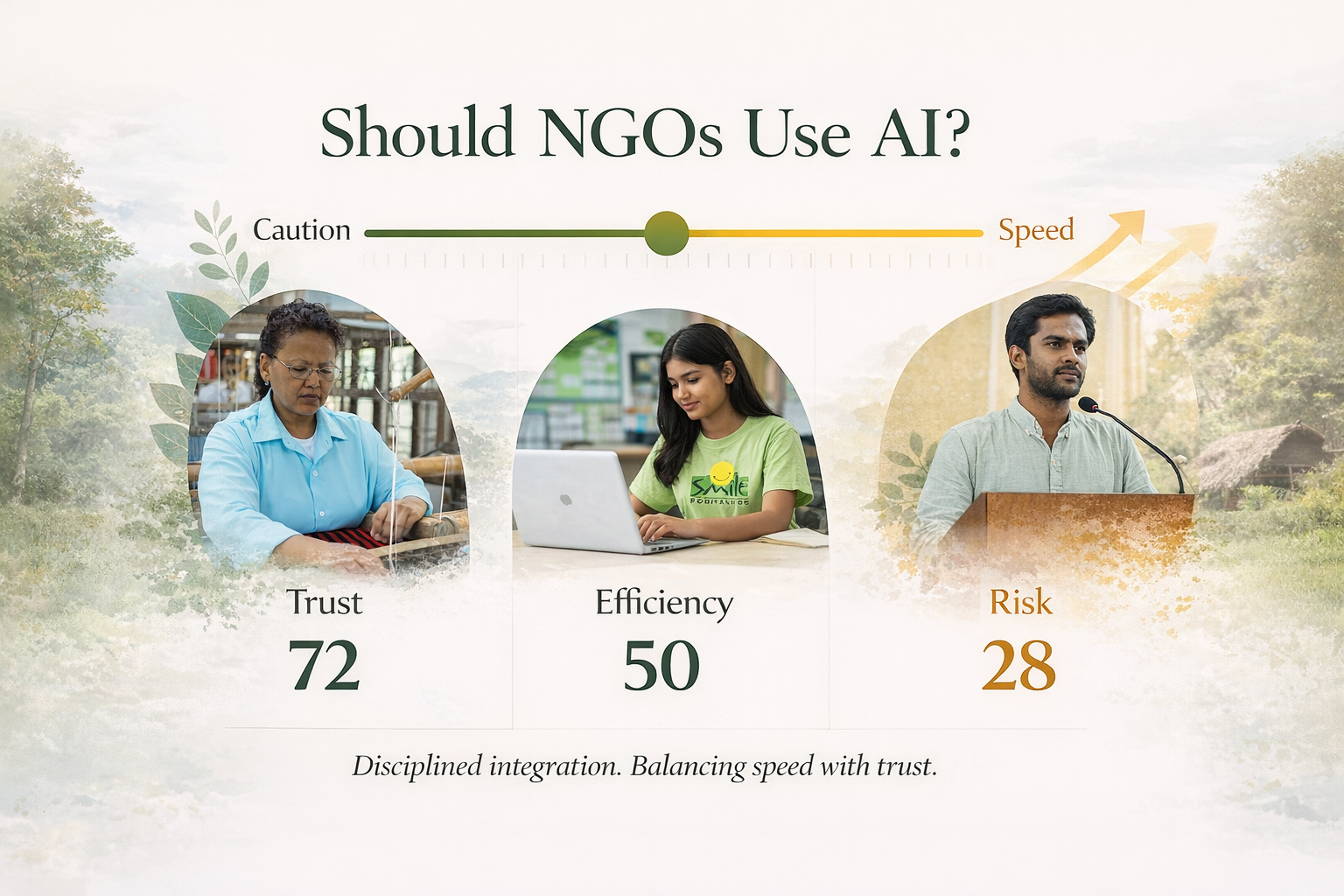

The more productive question, therefore, is not whether NGOs should use AI, but how they should do so without compromising integrity.


The Case for Adoption

Most NGOs operate with small communications teams and limited budgets. Translating monitoring and evaluation reports into readable narratives requires time. Converting technical research into public-facing analysis demands specialised skill. Producing multilingual content stretches already thin resources.

AI systems can assist in drafting articles, summarising research, structuring impact reports and translating material into accessible formats. Used responsibly, they can reduce mechanical workload and allow communications teams to focus on strategy, nuance and ethical oversight.

In this sense, AI becomes an efficiency tool rather than a creative replacement. It supports clarity; it does not generate authenticity.

For organizations working in data-heavy sectors such as education, health, skill development and women’s empowerment, the ability to synthesise data quickly and communicate consistently can strengthen both advocacy and fundraising.

However, efficiency cannot become the sole metric of value.

The Risks of Uncritical Use

AI systems are probabilistic. They generate responses based on patterns, not lived experience. They can produce plausible but inaccurate statistics. They may oversimplify complex social realities. In some instances, they replicate biases embedded in training data.

For NGOs, the risks are specific and consequential.

First, data integrity. Publishing AI-generated statistics without verification can undermine credibility. Development communication depends on evidence; errors are costly.

Second, narrative authenticity. Beneficiary stories must not be fabricated, embellished or reconstructed through automated systems. The dignity of communities requires consent, accuracy and human engagement.

Third, confidentiality. Field documentation may contain sensitive personal information. Uploading such material to unsecured platforms carries privacy risks.
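As an illustration, a team could strip obvious identifiers from field notes before any text leaves the organisation. The Python sketch below is a minimal example built on assumptions: the `redact` function and its two patterns (email addresses and phone-number-like digit runs) are hypothetical, and regular expressions of this kind will miss names, villages and other details that can identify a person.

```python
import re

# Hypothetical patterns for illustration only. Real field documentation needs
# broader coverage (names, locations, ID numbers) and always a human review.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),  # runs of 9+ digits/spaces/hyphens
}

def redact(text: str) -> str:
    """Replace every match of each pattern with a labelled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

if __name__ == "__main__":
    note = "Contact Asha at asha.k@example.org or +91 98765 43210 after the visit."
    print(redact(note))  # Contact Asha at [EMAIL] or [PHONE] after the visit.
```

The name in the sample note survives redaction, which is precisely why automated filtering can reduce, but not remove, the need for careful human handling of sensitive material.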

These concerns do not argue for abstention. They argue for governance.

Tools and Their Appropriate Use

Several AI tools have emerged as particularly useful in communications workflows, though each carries limitations.

Large language models such as ChatGPT or Claude can assist in drafting blogs, summarising policy documents and restructuring technical material. Their strength lies in linguistic clarity and structural coherence. Their weakness lies in potential factual inaccuracy. All outputs must be independently verified before publication.

Design platforms such as Canva’s AI features or Adobe Firefly can accelerate the production of infographics and campaign creatives. They democratise design capacity. Yet there is a risk of visual genericism and oversimplification. Visual storytelling must preserve context and seriousness.

Data visualisation platforms such as Datawrapper or Flourish allow NGOs to present impact metrics interactively. These tools strengthen credibility when used carefully. However, visual distortion of data, whether intentional or accidental, can mislead audiences if context is omitted.

Video-editing tools such as Descript simplify transcription and editing, making multimedia storytelling more accessible. The caution here lies in overuse of synthetic voiceovers or excessive editing that alters meaning.

Finally, documentation tools such as Notion AI or Otter.ai can streamline internal operations by summarising meetings and reports. While efficient, they require careful handling of sensitive information and human review to ensure accuracy.

The common thread across these tools is assistance, not substitution.

Establishing Ethical Guardrails

If NGOs are to integrate AI responsibly, certain principles must be institutionalised.

Verification should be mandatory. No statistic generated through AI should appear in public communication without cross-checking against primary sources.

Transparency should guide internal practice. Teams must understand when and how AI is being used. While public disclosure may not always be required, internal accountability prevents misuse.

Dignity must remain central. AI should not simulate community voices or recreate experiences of trauma. Human engagement remains indispensable.
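The first of these principles, mandatory verification, can be partly supported with a simple pre-publication check that compares every figure in a draft against a register of numbers already confirmed from primary sources. The Python sketch below assumes a hypothetical `VERIFIED_FIGURES` register compiled by programme staff and a hypothetical `unverified_figures` helper; it only flags numbers missing from the register and says nothing about whether a matched figure is used in the right context.

```python
import re

# Hypothetical register of figures already checked against primary sources
# (e.g. monitoring and evaluation reports), stored exactly as they should appear.
VERIFIED_FIGURES = {"1,200", "38%", "14"}

# Integers with thousands separators, plain integers or decimals, optional "%".
NUMBER = re.compile(r"\d{1,3}(?:,\d{3})+(?:\.\d+)?%?|\d+(?:\.\d+)?%?")

def unverified_figures(draft: str) -> list[str]:
    """Return the numbers in a draft that do not appear in the verified register."""
    return [n for n in NUMBER.findall(draft) if n not in VERIFIED_FIGURES]

if __name__ == "__main__":
    draft = ("The programme reached 1,200 girls across 14 districts, "
             "and attendance rose by 42% in two years.")
    print(unverified_figures(draft))  # ['42%']
```

On the sample draft, only `42%` is flagged because it is absent from the register. Spelled-out numbers such as "two years" pass through unchecked, so the final cross-check against primary sources remains a human responsibility.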

In essence, AI can support the articulation of impact, but it cannot replace proximity to the field.

The Strategic Imperative

The development sector increasingly competes not only for funding but for narrative authority. Think tanks, consulting firms and multilateral agencies produce rapid, data-driven commentary. NGOs risk marginalisation if they cannot match the pace of discourse.

Responsible AI adoption can help close that gap. It enables faster response to policy developments, clearer reporting to donors and broader dissemination of programme learnings.

However, speed without scrutiny is dangerous.

The challenge before NGOs is to define the terms of engagement before technological momentum defines them. This requires capacity building, internal guidelines and a commitment to ethical communication standards.

A Balanced Conclusion

Artificial intelligence is neither a threat to NGO storytelling nor a panacea for communications challenges. It is a tool. Like any tool, its impact depends on how it is wielded.

The development sector’s comparative advantage has always been credibility grounded in lived engagement. If AI is integrated with discipline (verified, transparent and dignity-centred), it can enhance that credibility.

If adopted uncritically, it can weaken it.

The choice is not between tradition and technology. It is between governance and drift.

For NGOs navigating a rapidly evolving information landscape, the prudent course is not avoidance, but thoughtful integration.